Remarks on Structure Constrained Ontology

Read: Paper

Sensory systems and patterns

The sensory system (i.e., the aggregate unity of our visual-spatial system, auditory system, olfactory system, etc.) is organized by ontological concepts. I may see a "barn" and think:

- of it as a "building"

- as an item in a plan to store something in the future

- etc.

We associate "higher level" structures (linguistic items) with these basic phenomenal patterns.

Symbol Grounding Problem

I argue that there is no symbol grounding problem - our concepts, language, and linguistic frameworks are implicitly united. There are only relationships between linguistic modalities. The connection between the linguistic items used in cognition and structured sensory representations (or presentations) is discussed further in the paper but is summarized here.

Our sensory systems are partly organized by higher level concepts defined by models and theories.

Models and theories are linked to regimentations of our sensory percepts - thereby linking symbols (in cognition) to persistent patterns produced by our sensory systems.

The Symbol Grounding Problem asks how classical symbolic computationalism could associate the items of thought (cognition) or linguistic tokens with something in the world. It is essentially the very problem that Wittgenstein described earlier (problems with representation and correspondence). I argue that the connection is not a problem: there is nothing mysterious here, and it is given above (by holding the correct metaphor or picture of what's going on between language, world, models, and symbols).

On that basis, I argued in grad school that SCO "solves" (rather, dissolves) the Symbol Grounding Problem by explaining the link between structures (linguistic symbol systems) and data structures, allowing us to see that there is no mystery. Only by assuming hard-nosed representationalism do we run into such worries.

Burden of Argument

The main burden, though, in getting this idea off the ground is to give a convincing explanation and description of one of the basic concepts articulated in the paper:

"one has the phenomenal experience of seeing a brown cup at time one, an orange cup at time two, and a brown cup at time three."

Continuing:

"That gives rise to a specific data structure."

I explain this further:

"There is growing consensus that observed phenomena comes pre-structured (what I call a data structure) and that it is from the structure of the phenomena that one constructs their scientific theories and/or models, not from the phenomena directly. The takeaway idea is that we can organize or regiment our phenomenal experience in many different ways and each of these different ways is what I call a 'data structure.' According to the SCO view, there is no 'correct' way to organize or regiment our phenomenal experience but there may be better ways at least insofar as a particular data structure better satisfies pragmatic criteria (theoretical virtues) toward the end of some cognitive project than the rest."

So, I must give an improved articulation of what I mean by this and what is going on.

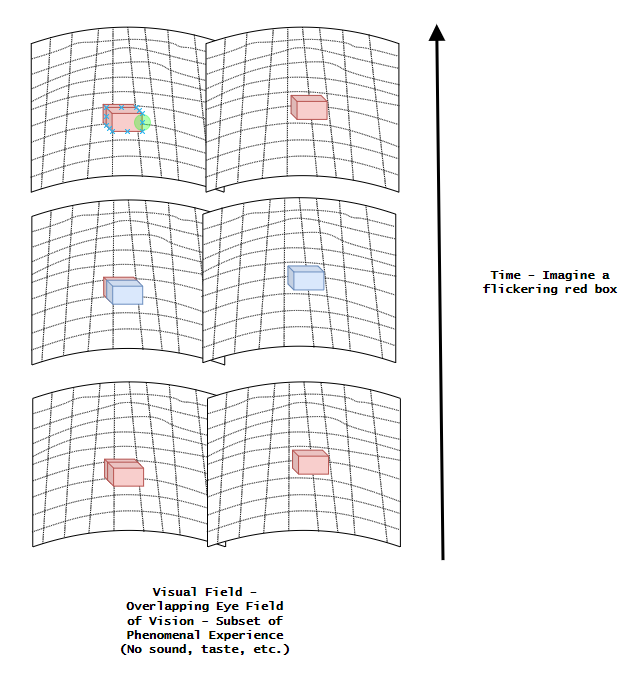

Above is an improved depiction of what I was describing. It shows the visual experience of seeing a flickering red box.

When we think about the data that's presented to us, we see that it is pre-structured. The box is situated within our field of vision (per Kant and the mind's construction of space and our perception of it) and it is colored. The experience of color is taken to be primitive or basic by many in the qualia debate, so I don't feel that this is a particularly controversial claim.

From these structured experiences, we can generate semantic models:

| Time | Color |
|---|---|
| 1 | Red |
| 2 | Blue |
| 3 | Red |

The table above is just such a semantic model (very simple and crude though it is). Our experiences are pre-structured, and from them we may build simple representations of those experiences (models).
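As a rough illustration of the idea that the same experience can be regimented in more than one way, here is a minimal Python sketch. The `observations` list simply mirrors the table above; the three function names are hypothetical and chosen only for this example, not taken from the paper.

```python
from collections import Counter

# Hypothetical observations: (time step, experienced color), mirroring the table.
observations = [(1, "Red"), (2, "Blue"), (3, "Red")]

def as_timeline(obs):
    """Regiment the experience as an ordered time -> color mapping."""
    return {t: color for t, color in sorted(obs)}

def as_color_counts(obs):
    """Regiment the same experience by how often each color occurs."""
    return Counter(color for _, color in obs)

def as_transitions(obs):
    """Regiment the same experience as color changes between adjacent steps."""
    colors = [color for _, color in sorted(obs)]
    return list(zip(colors, colors[1:]))

timeline = as_timeline(observations)
counts = as_color_counts(observations)
transitions = as_transitions(observations)
```

Each result is a different "data structure" built from the same three observations. None is the "correct" one; which is preferable depends on the cognitive project at hand (tracking order, frequency, or change).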

Further support from Machine Learning and Artificial Intelligence

AI and ML, for example, demonstrate how data gets clustered and grouped to create stable predictions and classification systems. In other words, what RNNs, CNNs, and multilayer perceptrons do is demonstrate how neural systems can come to reify or group particular patterns of pixels into "stable concepts" that organize further images fed into the network (classifying them). Here, there is nothing mysterious - only:

- A tremendous amount of discrete informational units.

- For images, collections of pixels constituting images (RGB - e.g. rgb(0-255, 0-255, 0-255)) that form highly likely (statistical) relationships with other pixels.

- Every pixel group's statistical relationship and likelihood relative to every other pixel group is calculated.

Over time, these singular, atomic, discrete units of information can be used to identify, say, a "dog" or a "cat" with 80+% accuracy. There is nothing mysterious here: there is the phenomenal data (the pixels), which is pre-structured into RGB units and image files. We use structures to understand and regiment it.
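To make the "nothing mysterious" point concrete, here is a deliberately tiny sketch. It is not a neural network: it swaps in a much simpler technique (nearest-centroid classification) on single RGB pixels, purely to show stipulated labels plus arithmetic producing a stable classification. The training values and the labels "reddish" and "bluish" are made up for illustration.

```python
from math import dist

# Hypothetical labeled training pixels (RGB triples). The labels are the only
# stipulated associations, supplied by a human operator.
training = {
    "reddish": [(250, 10, 10), (200, 30, 40), (230, 60, 20)],
    "bluish": [(10, 20, 240), (40, 50, 200), (30, 10, 220)],
}

def centroid(pixels):
    """Average each RGB channel over a group of pixels."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))

# One averaged "prototype" per label: a crude stand-in for a stable concept.
centroids = {label: centroid(pixels) for label, pixels in training.items()}

def classify(pixel):
    """Return the label whose centroid is closest in RGB space."""
    return min(centroids, key=lambda label: dist(pixel, centroids[label]))

label_a = classify((220, 40, 30))
label_b = classify((20, 30, 210))
```

Nothing in the program "understands" red or blue. New pixels are sorted only by their numerical distance to averages of the pre-structured, human-labeled inputs.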

In these examples:

- There is no conscious being within the computer hardware consciously classifying or organizing concepts, symbols, or data. There are merely binary permutations as allowed in programming languages.

- The input data and output data are themselves representational. They stand for pictures and colors that are rendered, given an appropriate monitor or output screen, in some fashion according to another programming language.

- There is no homunculus within the computer system that is conscious.

- There are human operators that define the algorithm and code. They do label some of the training set early on.

- Those explicit associations are the only associations that are ostensibly defined.

The process is purely "dumb," involving no concepts (only stipulated associations that eventually increase in applicable accuracy). All input data is pre-structured and itself representational, and semantic associations are made within models (the neural nets and taxonomic classifications - e.g. the stipulated associations).

Sellars and the Myth of the Given

I appeal to no Given but merely to what we experience - no sense datum constituting the world of the phenomenal as a kind of ontological or inferential atom (which is, just as Sellars argued, a metaphysical dogma no different from the very a priori metaphysics the empiricists wanted to dispel in the first place):

"Lastly, by 'phenomenal experience' I intend simply the unity of our sensory experience. What the constituents of our phenomenal experience are I leave open here. The only claim that I wish to make about phenomenal experience is that we associate certain items in our theories and structures with certain phenomenal experiences. This should not necessarily be understood as a reductive claim or a claim about the meaning of the items in a structure or theory but rather simply the claim many of the items that constitute a structure are often distinguished by the phenomenal experiences that we associate with them."

The world is presented to us and that presentation is structured. The world is not a "pyramid scheme" (it is not governed by the dogma of foundationalism - which, when wed with empiricism, becomes the subject of Sellars's argument) and inferences (that is, rationality itself) arise from and through structures in the manner described above.

post: 12/30/2018

update: 9/2/2019

update: 2/21/2020